Extract and manage millions of data points at scale, in a few clicks and in less time. Take your business and research to the next level with our data science automation and AI-powered no-code and low-code big-data web scraping services and tools.

Embrace technology democratisation through intuitive and user-friendly data extraction designed for users of all skill levels.

Get ready for a prompt-centred, chatbot-based user interface and a voice user interface (VUI) for spoken interaction with our data infrastructure and services.

Become an early adopter and get exclusive offers. Join our waiting list.

WebRobot for Africa: AI, Big-Data & Web Scraping

We collaborate with the Mistahou Financial Group to bring AI and Big-Data innovations to Africa.

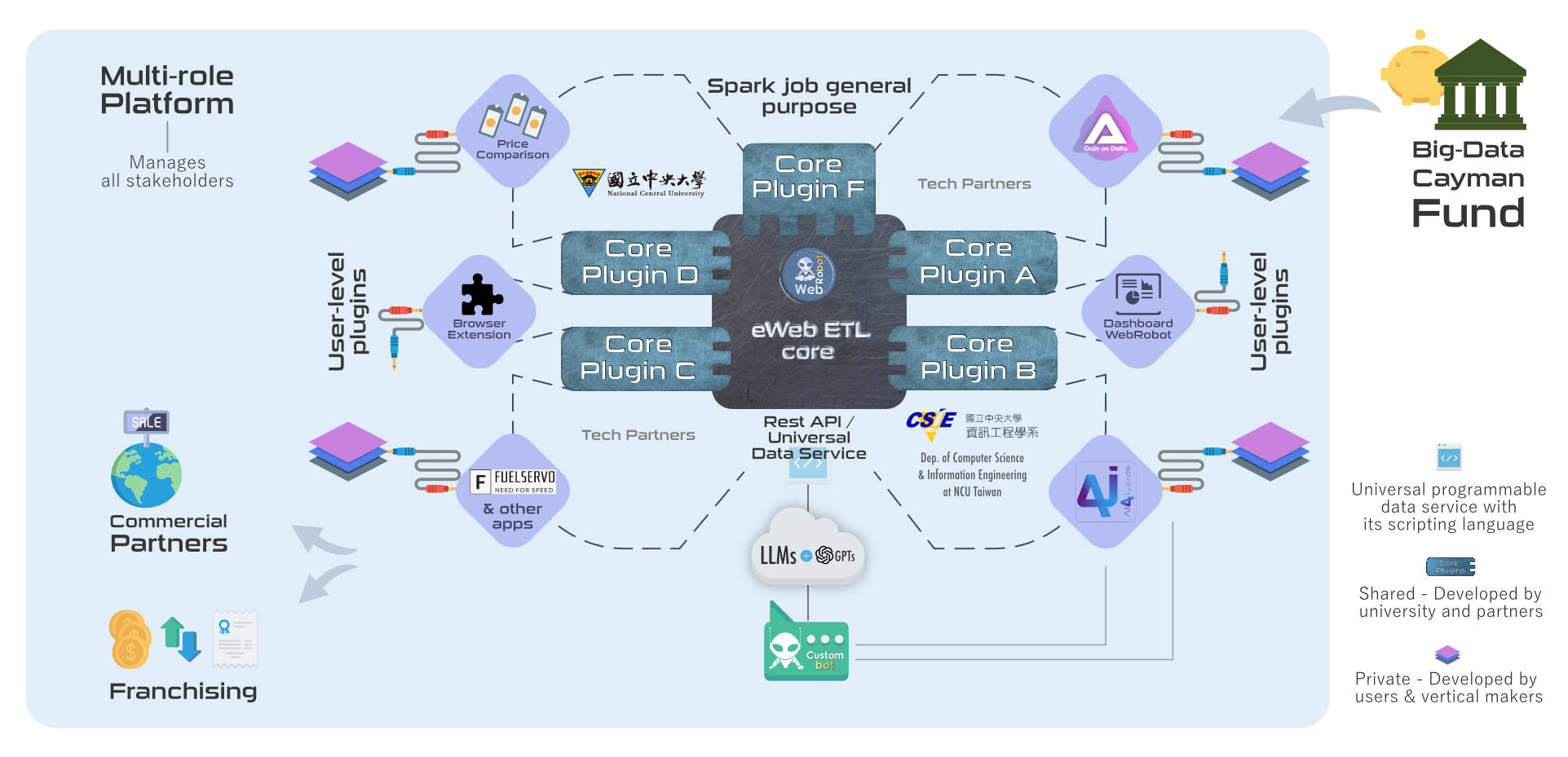

WebRobot Ecosystem: Scalable, extendable, franchisable

From eWeb ETL to global distribution - how everything is connected

We partnered with the National Central University to create the ultimate extendable ETL data platform for top applications and global distribution.

WebRobot for Asia: R&D, University & Innovation

What You Get

Premium big-data web scraping features for top business results.

Collect only relevant, up-to-date information. Get data whenever you need it to increase your sales and profit.

Millions of data points in less time

Up to 300,000+ dynamic web pages extracted automatically per hour.

Save Hundreds of Dollars

Our self-service low-code, no-code, and AI-assisted visual web scraping tool doesn’t require technical skills (advanced mode also available).

Better data at the right time

Set your dataset delivery frequency to meet your needs.

Updated info = No mistakes

Stay ahead: collect data whenever it changes.

Who is it for

Millions of businesses worldwide

who can benefit from our big-data web scraping

Data is the key to success. Big data is the key to market leadership. Everyone needs data to reach their goal.

Discover all the use cases and how to profit from data.

Scrape the web and extract your data without needing to know the technical details. Tell us your needs and objectives, and we will design the best data extraction solution for you.

Would you like to pay only for what you need? Our plans scale with the number of records and scrapers* (the websites you need to extract data from).

But whatever package you choose, we always offer the same top quality. We use our high-performance Spark-based big-data extraction engine to deliver the best datasets and results.

Every plan also includes:

up to 10 million (more on request)

from 1 to 30 (more on request)

daily, weekly, biweekly, monthly

¹ You can purchase new complete plans, additional record packs, or additional scraper (website) packs to add to your current plan. Adding records alone incurs no setup or maintenance costs (these are already included in the plans you already have). Adding new scrapers incurs additional setup and maintenance costs because we need to design new extraction queries and bots.

³ Within the number of pages and scrapers purchased.

⁴ Within the number of websites purchased. The exact number of URLs and web pages extracted by the end of the month or project depends on each project and the client’s specifications.

⁵ Subscription plans auto-renew until you cancel them.

* Websites and pages have different structures and layouts. Furthermore, you may need to extract different data from some or all categories or pages. This requires designing and running a different extraction bot (scraper) for each of them. For example, if you want to extract data from Amazon, eBay, and Walmart, you need to design at least three different scrapers.
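For readers who want to see why one scraper per website is needed, here is a minimal Python sketch. It is an illustration only, not WebRobot's engine: the `SCRAPERS` table, the `scraper_for` helper, and every CSS selector in it are hypothetical. It shows that each site's layout calls for its own extraction rules.

```python
from urllib.parse import urlparse

# Hypothetical selectors for illustration only; real page layouts differ
# from site to site and change over time.
SCRAPERS = {
    "amazon.com": {"title": "#productTitle", "price": ".a-price"},
    "ebay.com": {"title": ".item-title", "price": ".item-price"},
    "walmart.com": {"title": "h1.product-name", "price": ".price-now"},
}


def scraper_for(url):
    """Return the extraction rules designed for the site a URL belongs to."""
    host = urlparse(url).hostname or ""
    for domain, selectors in SCRAPERS.items():
        # Match the bare domain as well as subdomains such as "www.".
        if host == domain or host.endswith("." + domain):
            return selectors
    raise KeyError(f"no scraper designed for {host!r}")
```

Three websites mean three entries in the table. A URL from a site that has no entry is rejected, because no extraction rules have been designed for it yet.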

Note: Prices do not include VAT or other sales taxes. The Client is responsible for paying sales tax/VAT when required by law. When the law requires WebRobot Ltd to charge sales tax/VAT, the Client will be informed during the purchase process.